Evolutionary robotics is a technique used to synthesize robot controllers in an automated fashion. The robots are controlled by a neural network: the inputs to the network come from the sensors, and the outputs of the network control the robot's actuators. The parameters of the network (synaptic weights, biases, time constants, etc.) are tuned by a genetic algorithm. At each iteration of the genetic algorithm, a set of candidate controllers is evaluated and scored. Scores are assigned by a fitness function, which has to be designed on the basis of the desired goal. When all the candidates have received a score, they undergo a selection and reproduction process: the higher the score of a candidate, the higher its chances of reproduction. Reproduction consists of mixing the parameters of two different controllers to create two new ones, and of applying random variations to some of those parameters. By iterating the selection and reproduction processes, the set of candidate controllers normally improves its performance, and the best controllers allow the robot to achieve the desired goal.
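
The evaluate, select and reproduce loop described above can be sketched in Python as follows. This is a minimal illustration, not the genetic algorithm used in this work: the genome length, population size, mutation parameters, roulette-wheel selection, one-point crossover and the placeholder `fitness` function (which simply rewards parameters close to 0.5 instead of evaluating a robot) are all hypothetical choices.

```python
import random

# Hypothetical settings for illustration; the source does not specify them.
GENOME_LEN = 6        # e.g. weights, biases and time constants of a tiny network
POP_SIZE = 20         # even, so pairs of offspring fill the population exactly
MUTATION_RATE = 0.1   # probability of perturbing each parameter
MUTATION_STD = 0.2    # standard deviation of the Gaussian perturbation

def fitness(genome):
    # Placeholder fitness (higher is better). A real experiment would decode
    # the genome into a neural controller and score the robot's behavior.
    target = [0.5] * GENOME_LEN
    return -sum((g - t) ** 2 for g, t in zip(genome, target))

def select(population, scores):
    # Fitness-proportionate (roulette-wheel) selection of two parents:
    # scores are shifted to be positive, so a higher score means a
    # higher chance of being picked for reproduction.
    lowest = min(scores)
    weights = [s - lowest + 1e-6 for s in scores]
    return random.choices(population, weights=weights, k=2)

def crossover(a, b):
    # One-point crossover: mix the parameters of two parents into two offspring.
    point = random.randint(1, GENOME_LEN - 1)
    return a[:point] + b[point:], b[:point] + a[point:]

def mutate(genome):
    # Apply a small random variation to some of the parameters.
    return [g + random.gauss(0, MUTATION_STD) if random.random() < MUTATION_RATE else g
            for g in genome]

def evolve(generations=50, seed=0):
    random.seed(seed)
    population = [[random.uniform(-1, 1) for _ in range(GENOME_LEN)]
                  for _ in range(POP_SIZE)]
    for _ in range(generations):
        scores = [fitness(g) for g in population]          # evaluate and score
        offspring = []
        while len(offspring) < POP_SIZE:                   # select and reproduce
            parent_a, parent_b = select(population, scores)
            for child in crossover(parent_a, parent_b):
                offspring.append(mutate(child))
        population = offspring[:POP_SIZE]
    return max(population, key=fitness)
```

Calling `evolve()` returns the best parameter vector in the final population. In an actual evolutionary robotics setup, each call to `fitness` would run the robot (or a simulation of it) with the controller encoded by the genome, which is usually by far the most expensive step of the loop.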

Evolutionary robotics draws inspiration from biological evolution: the fitness function represents the selective pressure that living organisms face in nature (e.g. predators). As in nature, only the fittest individuals are able to reproduce and spread their genes. The fittest individuals are those whose characteristics allow them to survive in their environment. Because these individuals reproduce at higher rates, the characteristics that prove to be advantageous spread across the population over time.

In biology and psychology, the capability of natural organisms to learn by observing conspecifics is referred to as social learning. Roboticists have developed an interest in social learning, since it might represent an effective strategy to enhance the adaptivity of a team of autonomous robots.

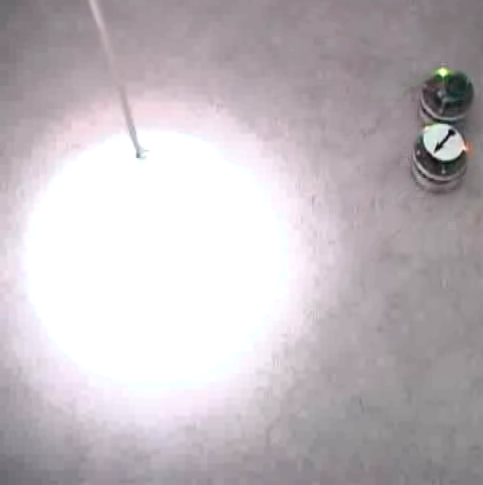

In our experiments, the robots have to learn the action to be performed with respect to a light source (phototaxis or antiphototaxis), either from environmental cues or socially, by observing the behavior of conspecifics. We show that evolutionary robotics can be employed to obtain neural controllers that exhibit learning capabilities.
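
To make the role of the fitness function more concrete, the sketch below shows one way a phototaxis/antiphototaxis task could be scored. It is a hypothetical example, not the fitness function used in these experiments: the 2-D trajectory representation, the light position and the names `trial_score` and `fitness` are assumptions made for illustration. Each trial rewards the net change in distance from the light, with the sign set by the action the robot is supposed to perform.

```python
import math

# Hypothetical fitness for a phototaxis/antiphototaxis task; an illustration
# only, not the function used in the experiments described here.

def trial_score(trajectory, light, phototaxis):
    """Score one trial from the robot's (x, y) positions over time.

    Positive when the robot moves in the required direction: towards the
    light for phototaxis, away from it for antiphototaxis.
    """
    start = math.dist(trajectory[0], light)
    end = math.dist(trajectory[-1], light)
    progress = start - end  # > 0 if the robot ended up closer to the light
    return progress if phototaxis else -progress

def fitness(trials, light):
    """Average the score over trials, each given as (trajectory, phototaxis).

    Mixing trials that require different actions rewards controllers that
    choose the right action in each condition, rather than always
    performing the same one.
    """
    scores = [trial_score(traj, light, photo) for traj, photo in trials]
    return sum(scores) / len(scores)
```

For example, with the light at `(3.0, 4.0)`, a robot that drives from `(0, 0)` straight to the light scores `5.0` in a phototaxis trial and `-5.0` in an antiphototaxis trial, so a controller that always approaches the light averages `0.0` over one trial of each kind, while one that approaches in the first trial and retreats in the second averages `5.0`.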

For more information about this work please refer to:

Video material related to the research work:

In the video, the robot initially performs phototaxis and then learns to switch to antiphototaxis after perceiving a tone.

In the video, there are two robots: an expert and a learner (marked with the arrow). The expert robot already knows whether it has to perform phototaxis or antiphototaxis. The learner robot should follow the expert using the information from its infrared proximity sensors and learn the desired behavior through observation.