Tracking System

IRIDIA is currently developing a Tracking System for the robot experiments taking place in the Robotics Arena. The purpose of the Tracking System is to detect the position of all the robots in the Arena, and to record and store the frames of the whole experiment.

Hardware

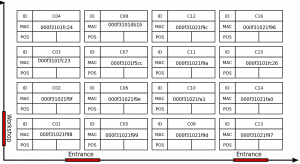

The tracking system is composed of a set of 16 Prosilica GC1600 C cameras, arranged as shown in Figure 1. The cameras are set up so that their collective field of view covers the entire area of the Arena. The cameras are connected to a dedicated computer, the Arena Tracking System Server, through an Ethernet connection. The Arena Tracking System Server hosts the API that users of the Tracking System can use to configure, run and record their experiments. (Ref: Alessandro Stranieri, Arena Tracking System)

Software

The Arena Tracking System's API is built on top of the Halcon library. Halcon is a library by MVTec Software GmbH, used for scientific and industrial computer vision applications. It provides an extensive set of optimized operators and comes with a full-featured IDE that allows for fast prototyping of computer vision programs, as well as camera calibration and configuration utilities. The library also provides drivers to easily interface with a broad range of cameras. (Ref: Alessandro Stranieri, Arena Tracking System)

Licence

IRIDIA owns a Floating Development Licence. That means that the library can be installed on any computer in the local network, but can be used by at most one of them at any time.

How to use Halcon

The Halcon libraries and the development environment HDevelop can be used either remotely from the Arena Tracking System Server or locally by downloading the libraries on your machine.

Access Halcon from the Arena Tracking System Server

The Halcon library is hosted on the Arena Tracking System Server. To use the library, you need to access the server through Secure Shell (SSH). Two interfaces are available for this server:

| IP | Alias | Bandwidth | Command |
|---|---|---|---|
| 164.15.10.153 | liebig.ulb.ac.be | 1 Gbit/s | ssh -X <user>@liebig.ulb.ac.be |
| 169.254.0.200 | | 10 Gbit/s | ssh -X <user>@169.254.0.200 |

To access the 10 Gbit interface you must be connected to the Camera Network. To connect to the Camera Network, plug your cable into the switch to which all the cameras are connected.

The 1 Gbit interface can be accessed directly from the internet.

Run Halcon on local machine

WARNING: this guide is meant for Halcon developers running 64-bit Linux. The installation process for other operating systems can be found at http://www.mvtec.com/halcon/download/release/index-10.html but it has not been tested.

- Download halcon.tar.gz from ______________

- Extract the content in /opt on your local machine. From the folder where you downloaded halcon.tar.gz:

sudo cp halcon.tar.gz /opt

cd /opt

sudo tar xf halcon.tar.gz

sudo rm halcon.tar.gz

- Add execution permission:

sudo chmod -R a+x /opt/halcon

- Set environment variables and add paths to path variables (to be done every reboot).

If you are using Bourne shell:

source /opt/halcon/.profile_halcon

If you are using C shell:

source /opt/halcon/.cshrc_halcon

- Connect your cable to the switch used by all the cameras in order to access the Camera Network (currently only the Ethernet port C4 in the Arena is connected to that switch). Then assign your local machine a static IP address of the form 169.254.x.x (other than 169.254.100.1) with netmask 255.255.0.0.

WARNING: the Camera Network does not provide an internet connection. You also need to be connected to the internet when launching Halcon (e.g. through the Hashmal Wifi).

- Open a new terminal and run HDevelop:

hdevelop
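The addressing rule from the Camera Network step above can be checked before configuring the interface. The following is a minimal sketch, not part of the Tracking System: the function name is hypothetical, and excluding 169.254.0.200 is an assumption based on that address being listed as the server's 10 Gbit interface. The cameras also hold addresses on this network, which are not listed here and are therefore not checked.

```python
import ipaddress

# The Camera Network uses 169.254.x.x with netmask 255.255.0.0.
CAMERA_NETWORK = ipaddress.ip_network("169.254.0.0/16")

# 169.254.100.1 is excluded by the guide. 169.254.0.200 is excluded here as an
# assumption, because it is the server's 10 Gbit interface.
RESERVED_ADDRESSES = {
    ipaddress.ip_address("169.254.100.1"),
    ipaddress.ip_address("169.254.0.200"),
}


def is_usable_camera_network_ip(text):
    """Return True if `text` is a plausible static IP for a local machine."""
    try:
        address = ipaddress.ip_address(text)
    except ValueError:
        return False  # not a valid IP address at all
    if address not in CAMERA_NETWORK:
        return False
    # The network and broadcast addresses cannot be assigned to a host.
    if address in (CAMERA_NETWORK.network_address, CAMERA_NETWORK.broadcast_address):
        return False
    return address not in RESERVED_ADDRESSES


if __name__ == "__main__":
    for candidate in ("169.254.1.5", "169.254.100.1", "192.168.1.10"):
        print(candidate, is_usable_camera_network_ip(candidate))
```

A passing check only means the address follows the rule stated above; it does not detect a conflict with an address already in use on the switch.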

How to set up the environment

Before using the Tracking System for an experiment, there are a few preliminary steps you should be aware of. These steps let you set up a suitable configuration of the cameras and build a marker model able to track the robots, according to the environment you need for the experiment.

Set the light intensity of the Robotics Arena

Before tuning any camera, you should first set the same light intensity you are going to use during the experiment.

Tune the cameras' settings

- Run HDevelop (see How to use Halcon) and open the Image Acquisition Interface:

Assistants -> Open New Image Acquisition

Make sure that the cameras you want to tune are switched on.

- Select Image Acquisition Interface and then Detect. GigEVision should automatically appear in the drop-down list.

- Switch to the Connection tab. From the Device drop-down list, select the camera you want to tune. The IDs of the cameras are given in Figure 1. Then click Connect.

- HDevelop is now connected to the camera and by clicking on Live you can see real time camera streaming.

- Place a robot with a marker under the camera. Zoom in on the marker and tune the camera settings until the marker is in focus and the image is clear. To change the focus, twist the upper ring on the camera's mount. To change the brightness, use the parameters in the Parameters tab, especially those related to exposure and gain. The same parameters must be set in the file resources.xml (see How to run an experiment).

You should try to set up the camera so that the markers are clear and in focus both in the center and in the corners of the camera's field of view.

Acquire and create a marker model

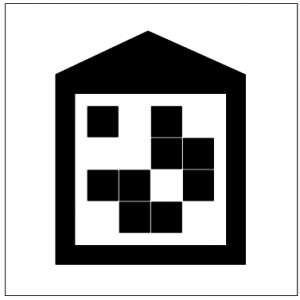

The creation and acquisition of a marker model is a critical step when using the Tracking System. The markers must be placed on top of the robots. They are composed of an external and an internal pattern. The external pattern is the same for all the robots and is used for detection. The internal pattern must be different for each robot and is used for decoding (see Figure 2 and Figure 3).

The marker for Epucks, for example, has a "house shaped" external pattern in which the roof indicates the orientation of the robot. The internal pattern is a rectangle containing six small black and white squares. Each particular configuration of black and white squares encodes a single Epuck. Six squares allow experiments with up to 64 robots. However, it is safer to avoid configurations that differ from each other in only one square.
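The advice about configurations that differ in only one square can be turned into a selection rule. The sketch below is illustrative and not part of the Tracking System: it represents each configuration as a six-bit integer, counts the squares in which two configurations differ (their Hamming distance), and greedily keeps only configurations that differ from every previously kept one in at least two squares. The function names are hypothetical.

```python
from itertools import combinations


def hamming_distance(a, b):
    """Number of squares (bits) in which two configurations differ."""
    return bin(a ^ b).count("1")


def select_marker_ids(num_squares=6, min_distance=2):
    """Greedily keep configurations that are pairwise at least
    `min_distance` squares apart, scanning them in increasing order."""
    selected = []
    for candidate in range(2 ** num_squares):
        if all(hamming_distance(candidate, kept) >= min_distance for kept in selected):
            selected.append(candidate)
    return selected


def min_pairwise_distance(ids):
    """Smallest Hamming distance between any two configurations in `ids`."""
    return min(hamming_distance(a, b) for a, b in combinations(ids, 2))


if __name__ == "__main__":
    ids = select_marker_ids()
    print(len(ids), "configurations, minimum distance", min_pairwise_distance(ids))
```

With six squares and a minimum distance of two, this keeps 32 of the 64 configurations, so a single misread square can never turn one selected marker into another selected marker.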

In the Tracking System decoding, the Epuck's ID is calculated by assigning each square a position and a value in a six-bit string. The squares are mapped to the bit string starting from the least significant bit, going from the top left corner to the bottom right corner. A white square has value 1 and a black square has value 0.

Example: in Figure 2 the ID of the robot, reading the squares from top left to bottom right, is: 0(black)*2^0 + 1(white)*2^1 + 0*2^2 + 0*2^3 + 0*2^4 + 1*2^5 = 2 + 32 = 34.
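The decoding rule can be written out as a short sketch. This is not the Tracking System's own implementation: the function names are hypothetical, and the input is assumed to be the list of six squares in the reading order described above (top left to bottom right), with 1 for white and 0 for black. The inverse function can help when designing the marker for a given ID.

```python
def decode_marker_id(squares):
    """Compute the ID from six squares, the first square being the least
    significant bit (1 = white, 0 = black)."""
    if len(squares) != 6:
        raise ValueError("expected exactly six squares")
    marker_id = 0
    for position, value in enumerate(squares):
        if value not in (0, 1):
            raise ValueError("square values must be 0 (black) or 1 (white)")
        marker_id += value << position  # value * 2^position
    return marker_id


def encode_marker_id(marker_id):
    """Inverse operation: list the six squares for an ID between 0 and 63."""
    if not 0 <= marker_id < 64:
        raise ValueError("six squares can only encode IDs from 0 to 63")
    return [(marker_id >> position) & 1 for position in range(6)]


if __name__ == "__main__":
    figure_2_squares = [0, 1, 0, 0, 0, 1]  # black, white, black, black, black, white
    print(decode_marker_id(figure_2_squares))  # prints 34
    print(encode_marker_id(34))  # prints [0, 1, 0, 0, 0, 1]
```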

The Tracking System provides scripts to acquire and create a marker model. The following steps explain how to acquire and create marker models for Epucks.

- In HDevelop, open the file ../hdev/marker_model_creation/aquire_marker_frame.hdev.

This script allows the user to take a picture that will be used to create the marker model.

WARNING: the choice of the marker model is critical for both the efficiency and the correctness of the Tracking System. Your goal is to create a model that is reliable and efficient. A good habit is to align the marker with one of the axes of the camera, so that it appears on screen as a square rather than a rhombus when it is time to create the model.

- Change the general path variables in the first part of the file, and set CameraID to the ID of the camera you chose for this step. Place one or more robots in the field of view of the chosen camera and run the script. You now have an image from which to infer the marker model.

- Open the file ../hdev/inspect_create_marker_model_from_image.hdev and modify the general path variables as done before.

- Run the script. It opens the image stored by the previous script, then stops and prompts you to zoom in on the marker you want to extract for the model.

- Run the script again. It now stops and waits for you to draw first the external rectangle of the marker, and then the internal rectangle. You are also asked to orient the arrow on each rectangle along the marker's orientation. Right click to confirm your selection.

WARNING: this is also a critical part of the model creation. You should try to be particularly accurate in sizing the two rectangles. The external one must be drawn in the white space within the paper and outside the house shape, taking care not to cut the roof. The same for the internal one, in which you should draw the rectangle in the white padding around the six squares.

- Run the script again. The model is created and the program shows how many markers it detected in the image. This is a first test of the reliability of your marker model.

To validate the model more thoroughly, you can run a few experiments as a test. You can also create several models and pick the best one after the tests.

To actually use your model in the experiment you must add an entry for it in the file resources.xml and set the right reference in the file experiment.xml (see How to run an experiment).

How to run an experiment

To run an experiment you need the archive Arena tracking system.tar.gz. In particular, you will have to modify two .xml files: resources.xml and experiment.xml. You can see an example of these files in arena_tracking_system/conf/examples/example1. You also need a main file; you can see an example in arena_tracking_system/source/examples.

resources.xml

This file stores all the resources available for the experiment, along with their parameters and settings.

The grabbers section contains all the possible frame grabbers, i.e. all the cameras and a folder in which images can be stored. Each grabber is described in its own grabber section. This is where you should set the proper parameters for the cameras, matching the values you chose in the HDevelop Image Acquisition Interface.

The trackers section is where all the available marker models are referenced. Here you can add a tracker entry for each new marker model you create.

Finally, in the cameras section, you must set the path for each camera to its handler files.

Any of these resources can be referenced in the experiment.xml file through its id parameter.

experiment.xml

This file must be tailored to the experiment you are going to run.

In the arena_state_tracker section you can add as many cameras as you need, by adding a new camera_state_tracker entry for each one and providing its camera state tracker id, grabber id, tracker id and camera view id. It is best practice to build the id of each item related to a camera from the prefix CST (camera state tracker), HC (grabber) or C (camera view) followed by the number of the camera.
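The naming practice for camera-related ids can be captured in a small helper. This is only a sketch: the function name and dictionary keys are hypothetical, and the exact id format (prefix immediately followed by the camera number, e.g. CST3) is an assumption. Check the files in arena_tracking_system/conf/examples/example1 for the format actually used.

```python
def camera_item_ids(camera_number):
    """Build the three ids related to one camera from its number.

    Assumed format: prefix directly followed by the number (CST3, HC3, C3).
    """
    if not isinstance(camera_number, int) or camera_number < 0:
        raise ValueError("camera_number must be a non-negative integer")
    return {
        "camera_state_tracker_id": f"CST{camera_number}",  # camera state tracker
        "grabber_id": f"HC{camera_number}",                # frame grabber
        "camera_view_id": f"C{camera_number}",             # camera view
    }


if __name__ == "__main__":
    print(camera_item_ids(3))
```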

By changing tracker id you can try different marker models.

In the experiment_record section there are two flags that enable image storing and directory overwriting, and the path to the directory in which you want to store the images.
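Because experiment.xml refers to the entries of resources.xml only by id, a mistyped id is an easy error to make. The sketch below shows one way to cross-check the two files before running an experiment. It is not part of the Tracking System. The section names (grabbers, trackers, cameras, arena_state_tracker, camera_state_tracker) come from the description above, but the root elements, the attribute names (id, grabber_id, tracker_id, camera_view_id) and the sample values are assumptions made for illustration, so adapt them to the real files in arena_tracking_system/conf/examples/example1.

```python
import xml.etree.ElementTree as ET

# Hypothetical file contents: element nesting and attribute names are assumed.
RESOURCES_XML = """
<resources>
  <grabbers><grabber id="HC1"/><grabber id="HC2"/></grabbers>
  <trackers><tracker id="epuck_model_a"/></trackers>
  <cameras><camera id="C1"/><camera id="C2"/></cameras>
</resources>
"""

EXPERIMENT_XML = """
<experiment>
  <arena_state_tracker>
    <camera_state_tracker id="CST1" grabber_id="HC1"
                          tracker_id="epuck_model_a" camera_view_id="C1"/>
    <camera_state_tracker id="CST2" grabber_id="HC2"
                          tracker_id="missing_model" camera_view_id="C2"/>
  </arena_state_tracker>
</experiment>
"""


def declared_ids(resources_root, section, tag):
    """Collect the id attribute of every <tag> entry inside <section>."""
    return {entry.get("id") for entry in resources_root.findall(f"./{section}/{tag}")}


def find_dangling_references(resources_text, experiment_text):
    """Return (camera state tracker id, attribute, value) for every
    reference in the experiment that is not declared in the resources."""
    resources = ET.fromstring(resources_text.strip())
    experiment = ET.fromstring(experiment_text.strip())
    known = {
        "grabber_id": declared_ids(resources, "grabbers", "grabber"),
        "tracker_id": declared_ids(resources, "trackers", "tracker"),
        "camera_view_id": declared_ids(resources, "cameras", "camera"),
    }
    problems = []
    for entry in experiment.iter("camera_state_tracker"):
        for attribute, ids in known.items():
            value = entry.get(attribute)
            if value not in ids:
                problems.append((entry.get("id"), attribute, value))
    return problems


if __name__ == "__main__":
    for problem in find_dangling_references(RESOURCES_XML, EXPERIMENT_XML):
        print("undeclared reference:", problem)
```

In this made-up example the second camera state tracker points to a tracker id that resources.xml does not declare, so it is the only entry reported.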

main.cpp

To execute an experiment you need a main file (.cpp). You can see two examples in arena_tracking_system/source/examples. In the main file you must specify the path to the configuration files (resources.xml and experiment.xml) and the behavior to execute in the loop each time a frame is grabbed.

Once you have compiled the main file, you can modify the configuration files resources.xml and experiment.xml without recompiling the code.

Adapter for the cameras

In case you need an adapter to attach the cameras to a standard camera mount (e.g., Manfrotto), you can create one using the laser cutter. Here are the files: ecp version, dxf version.