Difference between revisions of "Scenario suggestions"

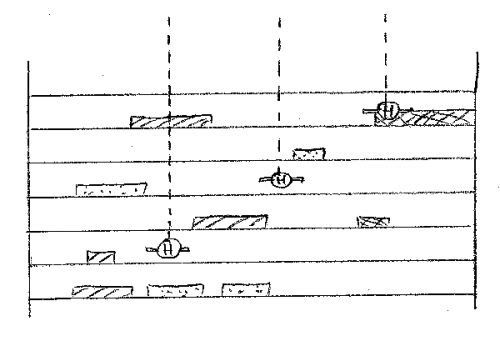

| + | [[Image:Example_new_scenario_smaller.png|right|thumb|500px|A swarm of hand-bots searching for objects of a certain type in a shelf.]] |

Latest revision as of 16:03, 9 May 2008

IRIDIA proposal for scenario modifications

Our proposal extends the scenario to allow for more sophisticated behavioural dynamics among multiple hand-bots. We propose replacing the single book that had to be retrieved (and that was criticised by the referees) with multiple objects spread across several shelves. The objects would be rectangular and would vary in attributes such as material, size, weight and LED colour. The task would then be to identify an appropriate subset of the objects, retrieve them from the shelves, and then (possibly) use them to build some form of structure.

We imagine that eye-bots could detect the presence of objects on a shelf but could not discriminate between objects with different attributes; such discrimination would require close-up sensing by the hand-bots. Thus the eye-bots could direct foot-bots and hand-bots to the appropriate shelves, but only the hand-bots could pick out the required subset of objects. Hand-bot discrimination could be achieved either through close-up camera-based sensing or through some kind of manipulation (e.g. lifting or squeezing the object). For an attribute like object length (or weight), multiple hand-bots might need to cooperate to find and select the right objects.

Depending on the parameters we use to set up this scenario, we see many interesting research possibilities, especially for exploring swarm dynamics, both across the heterogeneous swarm and within a homogeneous swarm of hand-bots. For example, with a high density of hand-bots, the hand-bots could collectively search the vertical plane by forming a communicating network, much as the eye-bots search the ceiling plane. Alternatively, when the hand-bot density is low, foot-bots could be used as markers to delineate already explored segments of the plane. Multiple hand-bots might be required to lift long, bendy, or overly heavy objects.

Required Modifications to Existing Swarmanoid Deliverables

To integrate this proposal into the existing Swarmanoid documentation, we propose to extend the existing task and environment complexity matrices as follows. Modifications are indicated in bold. Explanations and justifications for the modifications are given in italics.

Task Complexity Parameters

- Search **/ Select**
  - Retrieve single object
  - **Retrieve multiple objects**
  - **Retrieve subset of objects with a certain set of attributes** *(collaborative sensing)*
  - **Retrieve different types of objects with given attributes in given ratios** *(task allocation)*
  - **Retrieve objects in a given order** *(networked search, task allocation)*

Environment Complexity Parameters (target object parameters)

- Target Object Quantity
  - 1 object to be found and retrieved (default)
  - Many objects to be found and retrieved
- **Target Object Attributes**
  - **All objects are of the same type**
  - **Objects have varying attributes (material, length, weight, etc)** *(swarm h-bot behaviour, h-bot f-bot cooperation)*
- Target Object Movability
  - Single robot can move object
  - Cooperation required due to nature of object (e.g., too heavy/long for a single robot) (default)
- Target Object Grippability
  - Gripping easy: object designed to be easily grippable (default)
  - Gripping hard or requires cooperation
- Target Object Location
  - Target on floor
  - Target raised (e.g., on shelf or table) (default)
  - **Multiple objects sparsely distributed**
  - **Multiple objects densely distributed** *(Eye-bot can't tell where one object stops and the other starts)*
- Target Object Visibility (size, luminosity, etc.)
  - Easily detectable: object designed to make recognition easy (default)
  - Not easy to detect
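The environment parameters above could be captured in a small configuration record so that each experiment states its settings explicitly. The following is only a sketch: the class and member names (`TargetObjectConfig`, `Location.RAISED_SPARSE`, and so on) are hypothetical and not part of any Swarmanoid software. The defaults follow the entries marked "(default)" in the list; the attributes parameter has no stated default, so it is left as a required field.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Quantity(Enum):
    SINGLE = auto()   # 1 object to be found and retrieved
    MANY = auto()     # many objects to be found and retrieved


class Attributes(Enum):
    UNIFORM = auto()  # all objects are of the same type
    VARYING = auto()  # material, length, weight, etc. differ


class Movability(Enum):
    SINGLE_ROBOT = auto()
    COOPERATION_REQUIRED = auto()


class Grippability(Enum):
    EASY = auto()
    HARD_OR_COOPERATIVE = auto()


class Location(Enum):
    FLOOR = auto()
    RAISED = auto()         # e.g. on shelf or table
    RAISED_SPARSE = auto()  # multiple objects sparsely distributed
    RAISED_DENSE = auto()   # multiple objects densely distributed


class Visibility(Enum):
    EASY = auto()
    HARD = auto()


@dataclass(frozen=True)
class TargetObjectConfig:
    # No default is given for this parameter in the list, so it must be set.
    attributes: Attributes
    quantity: Quantity = Quantity.SINGLE
    movability: Movability = Movability.COOPERATION_REQUIRED
    grippability: Grippability = Grippability.EASY
    location: Location = Location.RAISED
    visibility: Visibility = Visibility.EASY

    def needs_close_up_sensing(self) -> bool:
        # Per the proposal, only hand-bots can discriminate between
        # objects with different attributes, via close-up sensing.
        return self.attributes is Attributes.VARYING


example = TargetObjectConfig(
    attributes=Attributes.VARYING,
    quantity=Quantity.MANY,
    location=Location.RAISED_SPARSE,
)
```

Making the record immutable (`frozen=True`) keeps a configuration from changing during a run, which is a design choice of this sketch rather than a requirement of the proposal.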

Example

Example Task: retrieve all soft objects

- Target objects look the same

- Objects can either be hard or soft

- Objects are distributed over multiple shelves (but not all)

- Hand-bot density is low

The eye-bot is required to explore the environment in order to identify the shelves that hold the objects and attract foot-bot/hand-bot groups to them. Foot-bots transport the hand-bots to the shelf and carry the objects back. The hand-bots have to communicate which robot searches which part of the shelf. Due to the low hand-bot density, foot-bots are used to mark already searched areas. Hand-bots need to squeeze each object that looks like the target object in order to find out which ones are soft.
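The division of labour in this example can be illustrated with a toy simulation. This is a minimal sketch under strong assumptions that are not part of the proposal: the shelf is modelled as a row of numbered slots, each hand-bot is given one contiguous segment, and `squeeze` is a stand-in for the real manipulation test. All names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ShelfObject:
    slot: int   # position on the shelf (assumed 1-D slot index)
    soft: bool  # hidden attribute, only revealed by squeezing


def partition_slots(n_slots, n_handbots):
    """Split slots 0..n_slots-1 into contiguous segments, one per hand-bot."""
    if n_handbots < 1:
        raise ValueError("at least one hand-bot is required")
    base, extra = divmod(n_slots, n_handbots)
    segments, start = [], 0
    for i in range(n_handbots):
        size = base + (1 if i < extra else 0)
        segments.append(range(start, start + size))
        start += size
    return segments


def squeeze(obj):
    """Stand-in for the close-up manipulation test a hand-bot would run."""
    return obj.soft


def search_shelf(objects, n_slots, n_handbots):
    """Return the slots of soft objects and the segments marked as explored."""
    by_slot = {obj.slot: obj for obj in objects}
    retrieved, explored = [], []
    for segment in partition_slots(n_slots, n_handbots):
        for slot in segment:
            obj = by_slot.get(slot)
            # All objects look the same, so every object found is squeezed.
            if obj is not None and squeeze(obj):
                retrieved.append(slot)
        # A foot-bot marks the segment once its hand-bot has finished.
        explored.append((segment.start, segment.stop))
    return retrieved, explored


shelf = [ShelfObject(1, True), ShelfObject(4, False), ShelfObject(7, True)]
soft_slots, marked = search_shelf(shelf, n_slots=10, n_handbots=3)
```

With ten slots and three hand-bots, the segments cover slots 0 to 3, 4 to 6 and 7 to 9, and the soft objects in slots 1 and 7 are selected for retrieval. A real system would negotiate segments through communication between hand-bots rather than through a central partition as done here.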