Difference between revisions of "Particle Swarm Optimization - Scholarpedia Draft"

| Line 23: | Line 23: | ||

<math>\Theta^* = \underset{\vec{\theta} \in \Theta}{\operatorname{arg\,min}} \, f(\vec{\theta}) = \{ \vec{\theta}^* \in \Theta \colon f(\vec{\theta}^*) \leq f(\vec{\theta}) \,\,\,\,\,\,\forall \vec{\theta} \in \Theta\}\,,</math> |

<math>\Theta^* = \underset{\vec{\theta} \in \Theta}{\operatorname{arg\,min}} \, f(\vec{\theta}) = \{ \vec{\theta}^* \in \Theta \colon f(\vec{\theta}^*) \leq f(\vec{\theta}) \,\,\,\,\,\,\forall \vec{\theta} \in \Theta\}\,,</math> |

||

| − | where <math>\vec{\theta}</math> is an <math>n</math>-dimensional vector that belongs to the set of feasible solutions <math>\Theta</math> (also called solution space). |

+ | where <math>\vec{\theta}</math> is an <math>n</math>-dimensional vector that belongs to the set of feasible solutions <math>\Theta</math> (also called solution space). |

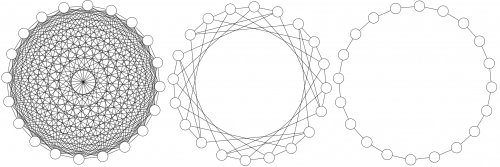

[[Image:Topologies.png|thumb|500px|right|Example population topologies. The leftmost picture depicts a fully connected topology, that is, <math>\mathcal{N}_i = \mathcal{P} \setminus \{p_i\}\,\,\forall p_i \in \mathcal{P}</math>. The picture in the center depicts a so-called von Neumann topology, in which <math>|\mathcal{N}_i| = 4\,\,\forall p_i \in \mathcal{P}</math>. The rightmost picture depicts a ring topology in which each particle is neighbor to two other particles.]]


Revision as of 17:47, 2 October 2008

Particle swarm optimization (PSO) is a population-based stochastic approach for tackling continuous and discrete optimization problems.

In particle swarm optimization, simple software agents, called particles, move in the solution space of an optimization problem. The position of a particle represents a candidate solution to the optimization problem at hand. Particles search for better positions in the solution space by changing their velocity according to rules originally inspired by behavioral models of bird flocking.

Particle swarm optimization belongs to the class of swarm intelligence techniques that are used to solve optimization problems.

History

Particle swarm optimization was introduced by Kennedy and Eberhart (1995). It has roots in the simulation of social behaviors using tools and ideas taken from computer graphics and social psychology research.

Within the field of computer graphics, the first antecedents of particle swarm optimization can be traced back to the work of Reeves (1983), who proposed particle systems to model objects that are dynamic and cannot be easily represented by polygons or surfaces. Examples of such objects are fire, smoke, water and clouds. In these models, particles are independent of each other and their movement is governed by a set of rules. Some years later, Reynolds (1987) used a particle system to simulate the collective behavior of a flock of birds. In a similar kind of simulation, Heppner and Grenander (1990) included a "roost" that was attractive to the simulated birds. Both models inspired the set of rules that were later used in the original particle swarm optimization algorithm.

Social psychology research was another source of inspiration in the development of the first particle swarm optimization algorithm. The rules that govern the movement of the particles in a problem's solution space can also be seen as a model of human social behavior in which individuals adjust their beliefs and attitudes to conform with those of their peers (Kennedy & Eberhart 1995).

Standard PSO algorithm

Preliminaries

The problem of minimizing <ref name="minimization">Without loss of generality, the presentation considers only minimization problems.</ref> the function <math>f: \Theta \to \mathbb{R}</math> with <math>\Theta \subseteq \mathbb{R}^n</math> can be stated as finding the set

<math>\Theta^* = \underset{\vec{\theta} \in \Theta}{\operatorname{arg\,min}} \, f(\vec{\theta}) = \{ \vec{\theta}^* \in \Theta \colon f(\vec{\theta}^*) \leq f(\vec{\theta}) \,\,\,\,\,\,\forall \vec{\theta} \in \Theta\}\,,</math>

where <math>\vec{\theta}</math> is an <math>n</math>-dimensional vector that belongs to the set of feasible solutions <math>\Theta</math> (also called solution space).

Let <math>\mathcal{P} = \{p_{1},p_{2},\ldots,p_{k}\}</math> be the population of particles (also referred to as the swarm). At any time step <math>t</math>, a particle <math>p_i</math> has a position <math>\vec{x}^{\,t}_i</math> and a velocity <math>\vec{v}^{\,t}_i</math> associated with it. The best position that particle <math>p_i</math> has visited up to time step <math>t</math> is represented by the vector <math>\vec{b}^{\,t}_i</math> (also known as the particle's personal best). Moreover, a particle <math>p_i</math> receives information from its neighborhood, which is defined as the set <math>\mathcal{N}_i \subseteq \mathcal{P}</math>. Note that a particle can belong to its own neighborhood. In the standard particle swarm optimization algorithm, the particles' neighborhood relations are commonly represented as a graph <math>G=\{V,E\}</math>, where each vertex in <math>V</math> corresponds to a particle in the swarm and each edge in <math>E</math> establishes a neighbor relation between a pair of particles. The resulting graph is commonly referred to as the swarm's population topology.
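As a rough illustration of how the neighborhoods <math>\mathcal{N}_i</math> can be derived from a population topology, the following Python sketch builds the neighbor sets for a fully connected, a ring, and a von Neumann topology. The function names, the use of integer indices 0 to k-1 in place of the particles, and the toroidal grid layout for the von Neumann case are assumptions made for this example only.

```python
def fully_connected(k):
    """Every particle is a neighbor of every other particle."""
    return [set(range(k)) - {i} for i in range(k)]


def ring(k):
    """Every particle is a neighbor of the two adjacent particles on a ring."""
    return [{(i - 1) % k, (i + 1) % k} for i in range(k)]


def von_neumann(rows, cols):
    """Particles sit on a rows x cols grid that wraps around at the edges
    (assumed layout); the neighbors are the particles above, below, left
    and right. Each set has 4 elements when rows >= 3 and cols >= 3."""
    neighborhoods = []
    for i in range(rows * cols):
        r, c = divmod(i, cols)
        neighborhoods.append({
            ((r - 1) % rows) * cols + c,   # above
            ((r + 1) % rows) * cols + c,   # below
            r * cols + (c - 1) % cols,     # left
            r * cols + (c + 1) % cols,     # right
        })
    return neighborhoods
```

In this sketch a particle is not a member of its own neighborhood; adding index <code>i</code> to each set gives the self-inclusive alternative mentioned above.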

The algorithm

The algorithm starts with the random initialization of the particles' positions and velocities within an initialization space <math>\Theta^\prime \subseteq \Theta</math>. During the main loop of the algorithm, the particles' velocities and positions are iteratively updated until a stopping criterion is met.

The update rules are:

<math>\vec{v}^{\,t+1}_i = w\vec{v}^{\,t}_i + \varphi_1\vec{U}^{\,t}_1(\vec{b}^{\,t}_i - \vec{x}^{\,t}_i) + \varphi_2\vec{U}^{\,t}_2(\vec{l}^{\,t}_i - \vec{x}^{\,t}_i) \,,</math>

<math>\vec{x}^{\,t+1}_i = \vec{x}^{\,t}_i +\vec{v}^{\,t+1}_i \,,</math>

where <math>w</math> is a parameter called the inertia weight (Shi and Eberhart 1999), <math>\varphi_1</math> and <math>\varphi_2</math> are two parameters called acceleration coefficients, and <math>\vec{U}^{\,t}_1</math> and <math>\vec{U}^{\,t}_2</math> are two <math>n \times n</math> diagonal matrices whose diagonal elements are drawn uniformly at random from the interval <math>[0,1)\,</math>. These matrices are regenerated at every iteration, so that in general <math>\vec{U}^{\,t+1}_{1,2} \neq \vec{U}^{\,t}_{1,2}</math>. Vector <math>\vec{l}^{\,t}_i</math> is the best position ever found by any particle in the neighborhood of particle <math>p_i</math>, that is, <math>f(\vec{l}^{\,t}_i) \leq f(\vec{b}^{\,t}_j) \,\,\, \forall p_j \in \mathcal{N}_i</math>. If the values of <math>w</math>, <math>\varphi_1</math> and <math>\varphi_2</math> are properly chosen, the algorithm is guaranteed to be stable (Clerc and Kennedy 2002).
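Because the random matrices are diagonal, multiplying by them amounts to scaling each component by its own random number. The following Python sketch applies the two update rules to a single particle under that reading. The function name and argument names are hypothetical, and positions and velocities are assumed to be plain lists of floats.

```python
import random


def update_particle(x, v, b, l, w, phi1, phi2, rng=random):
    """Apply one velocity and position update to a single particle.

    x, v: current position and velocity; b: personal best position;
    l: best position found in the particle's neighborhood.
    Returns the pair (new_x, new_v) without modifying the inputs.
    """
    new_v = []
    for xj, vj, bj, lj in zip(x, v, b, l):
        u1 = rng.random()  # j-th diagonal element of U_1, in [0, 1)
        u2 = rng.random()  # j-th diagonal element of U_2, in [0, 1)
        new_v.append(w * vj + phi1 * u1 * (bj - xj) + phi2 * u2 * (lj - xj))
    new_x = [xj + vj for xj, vj in zip(x, new_v)]
    return new_x, new_v
```

When a particle sits exactly on both its personal best and its neighborhood best, the two attraction terms vanish and the particle keeps moving only because of the inertia term <math>w\vec{v}^{\,t}_i</math>.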

A pseudocode version of the standard PSO algorithm is shown below:

:Inputs Objective function <math>f:\Theta \to \mathbb{R}</math>, the initialization domain <math>\Theta^\prime \subseteq \Theta</math>, the set of particles <math>\mathcal{P} \colon |\mathcal{P}| = k</math>, the parameters <math>w</math>, <math>\varphi_1</math>, and <math>\varphi_2</math>, and the stopping criterion <math>S</math>

:Output Best solution found

 // Initialization
 Set t := 0
 for i := 1 to k do
   Initialize <math>\mathcal{N}_i</math> to a subset of <math>\mathcal{P}</math> according to the desired topology
   Initialize <math>\vec{x}^{\,t}_i</math> and <math>\vec{v}^{\,t}_i</math> randomly within <math>\Theta^\prime</math>
   Set <math>\vec{b}^{\,t}_i</math> := <math>\vec{x}^{\,t}_i</math>
 end for
 // Main loop
 while <math>S</math> is not satisfied do
   // Velocity and position update loop
   for i := 1 to k do
     Set <math>\vec{l}^{\,t}_i</math> := the best, according to <math>f</math>, of the personal best positions <math>\vec{b}^{\,t}_j</math> of the particles <math>p_j \in \mathcal{N}_i</math>
     Generate random matrices <math>\vec{U}^{\,t}_1</math> and <math>\vec{U}^{\,t}_2</math>
     Set <math>\vec{v}^{\,t+1}_i</math> := <math>w\vec{v}^{\,t}_i + \varphi_1\vec{U}^{\,t}_1(\vec{b}^{\,t}_i - \vec{x}^{\,t}_i) + \varphi_2\vec{U}^{\,t}_2(\vec{l}^{\,t}_i - \vec{x}^{\,t}_i)</math>
     Set <math>\vec{x}^{\,t+1}_i</math> := <math>\vec{x}^{\,t}_i + \vec{v}^{\,t+1}_i</math>
   end for
   // Solution update loop
   for i := 1 to k do
     if <math>f(\vec{x}^{\,t+1}_i) \leq f(\vec{b}^{\,t}_i)</math> then
       Set <math>\vec{b}^{\,t+1}_i</math> := <math>\vec{x}^{\,t+1}_i</math>
     else
       Set <math>\vec{b}^{\,t+1}_i</math> := <math>\vec{b}^{\,t}_i</math>
     end if
   end for
   Set t := t + 1
 end while
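The pseudocode above can be turned into a short runnable program. The following Python sketch is one possible reading of it, not a reference implementation. Several choices are assumptions made here: the initialization space is a box given as a list of (low, high) pairs, the topology is a ring in which each particle also belongs to its own neighborhood, the stopping criterion is a fixed number of iterations, and the default parameter values are commonly quoted settings rather than values prescribed by this article.

```python
import random


def pso(f, bounds, k=20, w=0.7298, phi1=1.49618, phi2=1.49618,
        max_iter=200, seed=None):
    """Minimize f over a box; returns (best_position, best_value).

    bounds: list of (low, high) pairs, one per dimension (assumed form
    of the initialization space). Defaults are illustrative assumptions.
    """
    rng = random.Random(seed)
    n = len(bounds)
    # Ring topology; each particle is also in its own neighborhood.
    neigh = [[(i - 1) % k, i, (i + 1) % k] for i in range(k)]
    # Initialization of positions and velocities within the box.
    x = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(k)]
    v = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(k)]
    b = [xi[:] for xi in x]          # personal best positions
    fb = [f(xi) for xi in x]         # personal best values
    for _ in range(max_iter):
        # Velocity and position update loop
        for i in range(k):
            best = min(neigh[i], key=lambda j: fb[j])
            l = b[best]              # neighborhood best position
            for j in range(n):
                u1, u2 = rng.random(), rng.random()
                v[i][j] = (w * v[i][j]
                           + phi1 * u1 * (b[i][j] - x[i][j])
                           + phi2 * u2 * (l[j] - x[i][j]))
                x[i][j] += v[i][j]
        # Solution update loop
        for i in range(k):
            fx = f(x[i])
            if fx <= fb[i]:
                b[i], fb[i] = x[i][:], fx
    g = min(range(k), key=lambda i: fb[i])
    return b[g], fb[g]


def sphere(p):
    """Simple test function with its minimum, 0, at the origin."""
    return sum(c * c for c in p)


best_position, best_value = pso(sphere, [(-5.0, 5.0)] * 2, seed=1)
```

Personal bests are updated only after all particles have moved, which mirrors the two separate loops of the pseudocode. Positions are not clamped to the box in this sketch, so particles may leave the initialization space during the search.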

Main PSO variants

The original particle swarm optimization algorithm has undergone a number of changes since it was first proposed. Most of these changes affect the way the particles' velocity is updated. In the following subsections, we briefly describe some of the most important developments. For a more detailed description of many of the existing particle swarm optimization variants, see Kennedy and Eberhart (2001), Engelbrecht (2005), Clerc (2006), and Poli et al. (2007).

Discrete PSO

Most particle swarm optimization algorithms are designed to search in continuous domains. However, there are a number of variants that operate in discrete spaces. The first variant that worked on discrete domains was the binary particle swarm optimization algorithm (Kennedy and Eberhart 1997). In this algorithm, a particle's position is discrete but its velocity is continuous. The <math>j</math>th component of a particle's velocity vector is used to compute the probability with which the <math>j</math>th component of the particle's position vector takes a value of 1. Velocities are updated as in the standard PSO algorithm, but positions are updated using the following rule:

<math> x^{t+1}_{ij} = \begin{cases} 1 & \mbox{if } r < sig(v^{t+1}_{ij}),\\ 0 & \mbox{otherwise,} \end{cases} </math>

where <math>r</math> is a uniformly distributed random number in the range <math>[0,1)\,</math> and

<math> sig(x) = \frac{1}{1+e^{-x}}\,. </math>
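The binary position update can be sketched in Python as follows. The function names are hypothetical, and the two-branch form of the sigmoid is an implementation choice made here to avoid floating-point overflow for large negative velocities; it computes the same function as the formula above.

```python
import math
import random


def sig(x):
    """Logistic sigmoid 1 / (1 + e^(-x)), written to avoid overflow."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def binary_position_update(v, rng=random):
    """Return a new binary position given the updated velocity vector v.

    Component j is set to 1 with probability sig(v[j]) and to 0 otherwise.
    """
    return [1 if rng.random() < sig(vj) else 0 for vj in v]
```

A large positive velocity component therefore makes a 1 almost certain, a large negative component makes a 0 almost certain, and a velocity of zero gives both values equal probability.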

Bare bones PSO

The bare-bones particle swarm (Kennedy 2003) is a variant of the particle swarm optimization algorithm in which the velocity- and position-update rules are substituted by a procedure that samples a parametric probability density function.

In the bare bones particle swarm optimization algorithm, a particle's position update rule in the <math>j</math>th dimension is <math> x^{t+1}_{ij} = N\left(\mu^{t} ,\sigma^{\,t}\right)\,, </math> where <math>N</math> is a normal distribution with

<math> \begin{array}{ccc} \mu^{t} &=& \frac{b^{t}_{ij} + l^{t}_{ij}}{2} \,, \\ \sigma^{t} & = & |b^{t}_{ij} - l^{t}_{ij}| \,. \end{array} </math>
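A minimal Python sketch of this sampling step is given below. It assumes that <math>\sigma^{t}</math> is used as the standard deviation of the normal distribution; the function name is hypothetical.

```python
import random


def bare_bones_update(b, l, rng=random):
    """Sample a new position for one particle, dimension by dimension.

    b: the particle's personal best position;
    l: the best position found in its neighborhood.
    Each component is drawn from a normal distribution centered midway
    between b[j] and l[j], with standard deviation |b[j] - l[j]|
    (assumed interpretation of sigma).
    """
    return [rng.gauss((bj + lj) / 2.0, abs(bj - lj)) for bj, lj in zip(b, l)]
```

Under this reading, the sampling spread shrinks as a particle's personal best approaches its neighborhood best, and when the two coincide in some dimension the sampled value in that dimension equals that common value.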

Fully informed PSO

In the standard particle swarm optimization algorithm, a particle is attracted toward its best neighbor. A variant in which a particle uses the information provided by all its neighbors in order to update its velocity is called the fully informed particle swarm (FIPS) (Mendes et al. 2004).

In the fully informed particle swarm optimization algorithm, the velocity-update rule is

<math> \vec{v}^{\,t+1}_i = w\vec{v}^{\,t}_i + \frac{\varphi}{|\mathcal{N}_i|}\sum_{p_j \in \mathcal{N}_i}\mathcal{W}(\vec{b}^{\,t}_j)\vec{U}^{\,t}_j(\vec{b}^{\,t}_j-\vec{x}^{\,t}_i) \,, </math>

where <math>w</math> is a parameter called the inertia weight, <math>\varphi</math> is a parameter called the acceleration coefficient, <math>\vec{U}^{\,t}_j</math> is a random diagonal matrix generated in the same way as in the standard algorithm, with one matrix per neighbor, and <math>\mathcal{W} \colon \Theta \to [0,1]</math> is a function that weighs the contribution of a particle's personal best position to the movement of the target particle based on its relative quality.
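The fully informed velocity update can be sketched in Python as follows. The function and argument names are hypothetical. The weights are assumed to be the precomputed values <math>\mathcal{W}(\vec{b}^{\,t}_j)</math> in <math>[0,1]</math>, one per neighbor, and each neighbor is assumed to receive its own random number per dimension.

```python
import random


def fips_velocity(x_i, v_i, neighbor_bests, weights, w, phi, rng=random):
    """Return the new velocity of one particle under the FIPS rule.

    x_i, v_i: current position and velocity of the target particle;
    neighbor_bests: personal best positions of the particles in N_i;
    weights: assumed precomputed values W(b_j), one per neighbor.
    """
    m = len(neighbor_bests)  # |N_i|
    new_v = []
    for j in range(len(x_i)):
        pull = 0.0
        for b, wt in zip(neighbor_bests, weights):
            # rng.random() plays the role of the j-th diagonal element of U_j
            pull += wt * rng.random() * (b[j] - x_i[j])
        new_v.append(w * v_i[j] + phi / m * pull)
    return new_v
```

Setting every weight to 1 makes all neighbors contribute equally, which is one simple choice for <math>\mathcal{W}</math> and is used here only as an example.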

Applications of PSO and Current Trends

Particle swarm optimization algorithms have been used successfully in the solution of single-objective and multiobjective problems (Reyes-Sierra and Coello Coello 2006). The first practical application of a PSO algorithm was in the field of neural network training and was published together with the algorithm itself (Kennedy and Eberhart 1995). Many more areas of application have been explored since then, including telecommunications, control, data mining, design, combinatorial optimization, power systems, and signal processing. To date, there are hundreds of publications reporting applications of particle swarm optimization algorithms. For a review, see Poli (2008).

A number of research directions are currently pursued, including:

- Theoretical aspects

- Matching algorithms (or algorithmic components) to problems

- Application to more and/or different kinds of problems

- Parameter selection

- Comparisons between PSO variants and other algorithms

- New variants

Notes

<references />

References

M. Clerc and J. Kennedy. The particle swarm - explosion, stability and convergence in a multidimensional complex space. IEEE Transactions on Evolutionary Computation, 6(1):58-73, 2002.

M. Clerc. Particle Swarm Optimization. ISTE, London, UK, 2006.

A. P. Engelbrecht. Fundamentals of Computational Swarm Intelligence. John Wiley & Sons, Chichester, UK, 2005.

F. Heppner and U. Grenander. A stochastic nonlinear model for coordinated bird flocks. The Ubiquity of Chaos. AAAS Publications, Washington, DC, 1990.

J. Kennedy. Bare bones particle swarms. In Proceedings of the IEEE Swarm Intelligence Symposium, pages 80-87, IEEE Press, Piscataway, NJ, 2003.

J. Kennedy and R. Eberhart. Particle swarm optimization. In Proceedings of IEEE International Conference on Neural Networks, pages 1942-1948, IEEE Press, Piscataway, NJ, 1995.

J. Kennedy and R. Eberhart. A discrete binary version of the particle swarm algorithm. In Proceedings of the IEEE International Conference on Systems, Man and Cybernetics, pages 4104-4108, IEEE Press, Piscataway, NJ, 1997.

J. Kennedy and R. Eberhart. Swarm Intelligence. Morgan Kaufmann, San Francisco, CA, 2001.

R. Mendes, J. Kennedy, and J. Neves. The fully informed particle swarm: simpler, maybe better. IEEE Transactions on Evolutionary Computation, 8(3):204-210, 2004.

R. Poli. Analysis of the publications on the applications of particle swarm optimisation. Journal of Artificial Evolution and Applications, Article ID 685175, 10 pages, 2008.

R. Poli, J. Kennedy, and T. Blackwell. Particle swarm optimization. An overview. Swarm Intelligence, 1(1):33-57, 2007.

W. T. Reeves. Particle systems - a technique for modeling a class of fuzzy objects. ACM Transactions on Graphics, 2(2):91-108, 1983.

M. Reyes-Sierra and C. A. Coello Coello. Multi-objective particle swarm optimizers: A survey of the state-of-the-art. International Journal of Computational Intelligence Research, 2(3):287-308, 2006.

C. W. Reynolds. Flocks, herds, and schools: A distributed behavioral model. ACM Computer Graphics, 21(4):25-34, 1987.

Y. Shi and R. Eberhart. A modified particle swarm optimizer. In Proceedings of the IEEE Congress on Evolutionary Computation, pages 69-73, IEEE Press, Piscataway, NJ, 1999.

External Links

- Papers on PSO are published regularly in many journals and conferences:

- The main journal reporting research on PSO is Swarm Intelligence. Other journals also publish articles about PSO. These include the IEEE Transactions series, Natural Computing, Structural and Multidisciplinary Optimization, Soft Computing and others.

- ANTS - From Ant Colonies to Artificial Ants: A Series of International Workshops on Ant Algorithms. This biennial series of workshops, held for the first time in 1998, is the oldest conference in the ACO and swarm intelligence fields.

- The IEEE Swarm Intelligence Symposia, started in 2003.

- Special sessions or special tracks on PSO are organized in many conferences. Examples are the IEEE Congress on Evolutionary Computation (CEC) and the Genetic and Evolutionary Computation Conference (GECCO) series.

- Papers on PSO are also published in the proceedings of many other conferences such as Parallel Problem Solving from Nature conferences, the European Workshops on the Applications of Evolutionary Computation and many others.

See also

Optimization, Stochastic Optimization, Swarm Intelligence, Ant Colony Optimization