IRIDIA cluster architecture

Christensen (talk | contribs) |

|||

| (52 intermediate revisions by 3 users not shown) | |||

| Line 1: | Line 1: | ||

== Introduction == |

== Introduction == |

||

| + | [[Image:Rack_cluster.jpg|thumb|The rack cluster]] |

||

| − | |||

| + | [[Image:Shelf_cluster.jpg|thumb|The shelf cluster]] |

||

The cluster was built in 2002 and has been extended and modified since. Currently it consists of two servers and a number of nodes with disks and a number with out disks. The disk-less nodes are the normal PC looking boxes on the shelves in the server room, while the nodes with disks are the ones in the rack. |

The cluster was built in 2002 and has been extended and modified since. Currently it consists of two servers and a number of nodes with disks and a number with out disks. The disk-less nodes are the normal PC looking boxes on the shelves in the server room, while the nodes with disks are the ones in the rack. |

||

| Line 8: | Line 9: | ||

* NTP |

* NTP |

||

* NIS |

* NIS |

||

| − | * Sun Grid Engine |

+ | * Sun Grid Engine (the actual scheduler is running on polyphemus) |

* Vortex License |

* Vortex License |

||

* DHCP for the computer on the shelves |

* DHCP for the computer on the shelves |

||

* TFTP for diskless booting |

* TFTP for diskless booting |

||

| − | Normally, users will log on to |

+ | Normally, users will log on to polyphemus and submit jobs using the Sun Grid Engine. |

== Physical setup == |

== Physical setup == |

||

| + | {| width="710px" |

||

| + | |- |

||

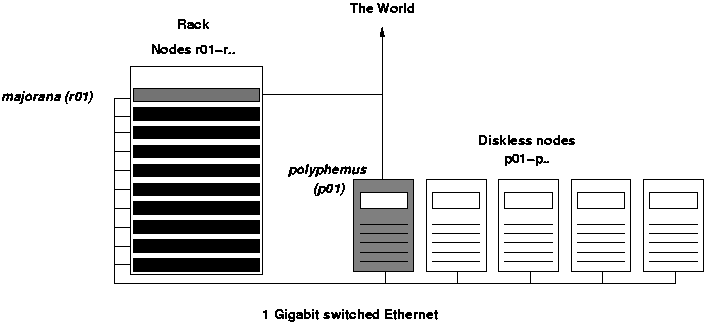

| + | |[[Image:Cluster_architecture.png]] |

||

| + | | |

||

| + | |- |

||

| + | |''The figure shows the cluster architecture, notice that each of the two servers, majorana and polyphemus, double as computing nodes in each of their part of the cluster. That is majorana is also r01 while polyphemus is also p01. |

||

| + | | |

||

| + | |- |

||

| + | |} |

||

== Redundancy and replication == |

== Redundancy and replication == |

||

| − | ''Notice that none of the things mentioned below has actually been installed - this |

+ | ''Notice that only some none of the things mentioned below has actually been installed - this should be considered merely a wish list or a list of ideas'' |

Two servers provide two access points to the cluster. The services |

Two servers provide two access points to the cluster. The services |

||

| Line 34: | Line 44: | ||

* Sun Grid Engine (SGE) can be run only on one computer (polyphemus). All the nodes access its data via NFS. majorana can copy SGE directory daily, but if polyphemus crashes, all the nodes must be instructed to mount the new directory on majorana. |

* Sun Grid Engine (SGE) can be run only on one computer (polyphemus). All the nodes access its data via NFS. majorana can copy SGE directory daily, but if polyphemus crashes, all the nodes must be instructed to mount the new directory on majorana. |

||

* ''/home'' directories. There can be only one NFS server in the network. majorana was chosen because it react faster: it has 2 CPUs, and when one is busy writing, the other can still process other incoming requests. Both majorana and polyphemus use RAID architecture to prevent data loss. The only problem is if majorana is not reachable any more. In this case, each process on the nodes that tries to access \texttt{/home} will be stopped till majorana comes up again. |

* ''/home'' directories. There can be only one NFS server in the network. majorana was chosen because it react faster: it has 2 CPUs, and when one is busy writing, the other can still process other incoming requests. Both majorana and polyphemus use RAID architecture to prevent data loss. The only problem is if majorana is not reachable any more. In this case, each process on the nodes that tries to access \texttt{/home} will be stopped till majorana comes up again. |

||

| − | * The root directories of the diskless nodes are on |

+ | * The root directories of the diskless nodes are on majorana. If majorana is not reachable, these nodes will be blocked waiting for majorana to come up again. polyphemus could keep a backup the these directories, but if the administrator wants to mount the backup directories on polyphemus, the nodes must be manually rebooted because they are note reachable via SSH. The DHCP configuration must also be changed to give the new mount point of the backup directories to the nodes. |

| + | * majorana is also a DHCP server for all the local network. Two groups are defined in its configuration file, one for the diskless and one for the computers in the rack. |

||

| − | * In this solutions, two different DHCP servers deals with the two groups because of the different way of maintaining and updating the nodes. Anyway, only one server could do the same job. In case of failure of one server, the other can restart the DHCP server with a new configuration to deal with the whole cluster. |

||

| + | |||

| + | |||

| + | '''Note: correct sequence to switch on and off the cluster''' |

||

| + | The correct sequence to switch on the cluster is: majorana first, polyphemus second (as root give the command "mount -a"), all the nodes third. |

||

| + | |||

| + | The correct sequence to switch off the cluster is: all the nodes first, polyphemus second, majorana third. |

||

| + | |||

| + | == Electric system and power lines == |

||

| + | [[Image:NewLine21.jpg|thumb|Power Line 21]] |

||

| + | [[Image:Q5-2.jpg|thumb|Power Line Q5]] |

||

| + | [[Image:Rack-power-lines.jpg|thumb|Power Lines Q12, Q13, Q14, Q15, 22]] |

||

| + | |||

| + | Currently the cluster is connected to the power system through 6 lines: 21, Q5, Q12, Q13, Q14, Q15, and 22. |

||

| + | |||

| + | *21: connects 1 Back-UPS CS 500 APC (300 Watts / 500 VA - Typical Backup Time at Full Load 2.4 minutes) connected to the server polyphemus + 1 monitor 19" NEC MultiSync 95F (2.2A), and 1 switch D-Link DGS-1004T (8W) |

||

| + | *Q5: connects 1 switch D-Link DGS-1016T (40W), 1 switch D-Link DGS-1024T (75W) and 4 diskless nodes: p02, p03, p04, p05 |

||

| + | *Q12: connects 5 nodes (1,3A each) of the rack: r29, r30, r31, r32, r33 |

||

| + | *Q13: connects 8 nodes (1,3A each) of the rack: r21, r22, r23, r24, r25, r26, r27, r28 |

||

| + | *Q14: connects 7 nodes (1,3A each) of the rack: r07, r08, r09, r10, r11, r12, r13 |

||

| + | *Q15: connects 7 nodes (1,3A each) of the rack: r14, r15, r16, r17, r18, r19, r20 |

||

| + | *22: connects 5 nodes (1,3A each) of the rack: r02, r03, r04, r05, r06, 1 switch Netgear GS-524T (70W), and 1 APC Smart-UPS 750 (480W) connected to the server majorana (1,5A) |

||

Latest revision as of 10:46, 17 June 2006

Introduction

The cluster was built in 2002 and has been extended and modified since. Currently it consists of two servers, a number of nodes with disks, and a number of nodes without disks. The diskless nodes are the ordinary PC-style boxes on the shelves in the server room, while the nodes with disks are the ones in the rack.

Currently, the servers as well as the nodes run 32-bit Debian GNU/Linux. The nodes in the rack are dual Opterons, however, so that might change at some point in the future.

majorana is the main server and provides the following services:

- NTP

- NIS

- Sun Grid Engine (the actual scheduler is running on polyphemus)

- Vortex License

- DHCP for the computers on the shelves

- TFTP for diskless booting

Normally, users will log on to polyphemus and submit jobs using the Sun Grid Engine.

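Physical setup

[Figure: Cluster_architecture.png]

The figure shows the cluster architecture. Notice that each of the two servers, majorana and polyphemus, doubles as a computing node in its own part of the cluster: majorana is also r01, while polyphemus is also p01.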

Redundancy and replication

Notice that only some of the things mentioned below have actually been installed - this should be considered merely a wish list or a list of ideas.

Two servers provide two access points to the cluster. The services required by the nodes are split between the servers in order to reduce the workload and to improve robustness to failures. Below is a description of which services can be duplicated and what needs to be done in case of a crash.

- The NIS protocol already allows for more than one server, of which only one is the master server. The others are slave servers that hold a copy of the master's data and that take over only when the master is unreachable.

- NTP is used to keep the clocks of the cluster synchronized. The clients can access only one server (to check!), although there might be more in the network. A failure of the NTP server is not considered critical, because it will take days before the clocks of the clients differ unreasonably. Therefore, one server is enough.

- The Vortex License server cannot be copied, and it is already configured so that it can run only on polyphemus. If polyphemus crashes, it is still possible to start it on majorana by changing the MAC address of the latter to 00:0C:6E:02:41:C3 (polyphemus's MAC address). majorana can copy the file needed to run the server on a daily basis.

- Sun Grid Engine (SGE) can run on only one computer (polyphemus). All the nodes access its data via NFS. majorana can copy the SGE directory daily, but if polyphemus crashes, all the nodes must be instructed to mount the new directory on majorana.

- /home directories. There can be only one NFS server in the network. majorana was chosen because it reacts faster: it has 2 CPUs, and when one is busy writing, the other can still process other incoming requests. Both majorana and polyphemus use a RAID architecture to prevent data loss. The only problem arises if majorana is no longer reachable. In this case, each process on the nodes that tries to access /home will be blocked until majorana comes up again.

- The root directories of the diskless nodes are on majorana. If majorana is not reachable, these nodes will be blocked waiting for majorana to come up again. polyphemus could keep a backup of these directories, but if the administrator wants to mount the backup directories on polyphemus, the nodes must be manually rebooted because they are not reachable via SSH. The DHCP configuration must also be changed to give the nodes the new mount point of the backup directories.

- majorana is also the DHCP server for the whole local network. Two groups are defined in its configuration file, one for the diskless nodes and one for the computers in the rack.

Note: correct sequence to switch on and off the cluster

The correct sequence to switch on the cluster is: majorana first, polyphemus second (as root give the command "mount -a"), all the nodes third.

The correct sequence to switch off the cluster is: all the nodes first, polyphemus second, majorana third.
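The two sequences above are mirror images of each other, which a short Python sketch can make explicit. This is only an illustration of the ordering: the names `BOOT_ORDER` and `power_sequence` and the labels "on" and "off" are assumptions made for the example, and nothing here actually powers machines on or off.

```python
# Boot order as given in the note above: majorana, then polyphemus, then the nodes.
BOOT_ORDER = ["majorana", "polyphemus", "nodes"]


def power_sequence(action):
    """Return the order in which the machine groups should be handled.

    action is "on" or "off" (hypothetical labels chosen for this sketch).
    Switching off is simply the boot order reversed.
    """
    if action == "on":
        return list(BOOT_ORDER)
    if action == "off":
        return list(reversed(BOOT_ORDER))
    raise ValueError(f"unknown action: {action!r}")


# Print a numbered checklist for shutting the cluster down.
for step, group in enumerate(power_sequence("off"), start=1):
    print(step, group)
```

Keeping a single list and reversing it means the two sequences cannot drift apart if a machine group is added later.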

Electric system and power lines

Currently the cluster is connected to the power system through 7 lines: 21, Q5, Q12, Q13, Q14, Q15, and 22.

- 21: connects 1 Back-UPS CS 500 APC (300 Watts / 500 VA - Typical Backup Time at Full Load 2.4 minutes) connected to the server polyphemus + 1 monitor 19" NEC MultiSync 95F (2.2A), and 1 switch D-Link DGS-1004T (8W)

- Q5: connects 1 switch D-Link DGS-1016T (40W), 1 switch D-Link DGS-1024T (75W) and 4 diskless nodes: p02, p03, p04, p05

- Q12: connects 5 nodes (1.3A each) of the rack: r29, r30, r31, r32, r33

- Q13: connects 8 nodes (1.3A each) of the rack: r21, r22, r23, r24, r25, r26, r27, r28

- Q14: connects 7 nodes (1.3A each) of the rack: r07, r08, r09, r10, r11, r12, r13

- Q15: connects 7 nodes (1.3A each) of the rack: r14, r15, r16, r17, r18, r19, r20

- 22: connects 5 nodes (1.3A each) of the rack: r02, r03, r04, r05, r06, 1 switch Netgear GS-524T (70W), and 1 APC Smart-UPS 750 (480W) connected to the server majorana (1.5A)
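As a rough cross-check of the list above, the following Python sketch adds up the current drawn by the rack nodes on each line, using the 1.3 A per-node figure and the node ranges given in the list. The names `RACK_RANGES`, `nodes_on`, and `node_current` are assumptions made for this example. The sketch covers rack nodes only: the switches, the UPS units, the monitor, and the servers are rated partly in watts and are deliberately left out, so the result for line 22 is a lower bound.

```python
NODE_CURRENT_A = 1.3  # amperes per rack node, from the list above

# First and last rack node number on each power line, from the list above.
RACK_RANGES = {
    "Q12": (29, 33),
    "Q13": (21, 28),
    "Q14": (7, 13),
    "Q15": (14, 20),
    "22": (2, 6),
}


def nodes_on(line):
    """Return the rack node names (r02, r03, ...) attached to a power line."""
    first, last = RACK_RANGES[line]
    return [f"r{n:02d}" for n in range(first, last + 1)]


def node_current(line):
    """Total current in amperes drawn by the rack nodes on a line."""
    return round(len(nodes_on(line)) * NODE_CURRENT_A, 1)


for line in RACK_RANGES:
    print(f"{line}: {len(nodes_on(line))} nodes, {node_current(line)} A")
```

The same table also confirms that the five lines together account for the 32 rack nodes r02 to r33.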