|

|

by Leslie Pérez Cáceres, Manuel López Ibáñez,

Thomas Stützle

2013

| Table of Contents |

Ant Colony Optimization (ACO) has been developed originally as an

algorithmic technique for tackling NP-hard combinatorial

optimization problems. Most of the research on ACO has focused on algorithmic

variants that obtain high-quality solutions when computation time

allows the evaluation of a very large number of candidate solutions,

often in the order of millions. However, in situations where the

evaluation of solutions is very costly in computational terms, only a

relatively small number of solutions can be evaluated within a

reasonable time. This situation may arise, for example, when

evaluation requires simulation. In such a situation, the current

knowledge on which are the best ACO strategies and which is the range

of the best settings for various ACO parameters may not be applicable anymore. In

this paper, we start an initial investigation of how different ACO

algorithms behave if they have available only a very limited number

of solution evaluations, say, 1000. We show that, after tuning the

parameter settings for this type of scenario, still the original Ant

System performs relatively poor compared to other ACO

strategies. However, the best parameter settings for such a small

evaluation budget are very different from the standard

recommendations available in the literature..

Keywords: ant colony optimization, ACOTSP, parameter setting

In this work we used five ACO algorithms:

The use implementation of these algorithms corresponds to ACOTSP software, a freely available framework that contains implementation of well known ACO algorithms. For the TSP the algorithm used in these experiments is the version 1.02 of ACOTSP. For QAP we use an adaptation of ACOTSP for solving QAP. We also use a version that allows online adaptation of parameters as described in [5].

| algorithm | ants | alpha | rho | q0 | beta | rasrank | elitistants |

|---|---|---|---|---|---|---|---|

| AS | 75/80 | 0.5 | 0.00 | 1 | 5/0 | − | − |

| EAS | 75/80 | 0.5 | 0.00 | 1 | 2/0 | − | #ants |

| RAS | 75/80 | 0.1 | 0.00 | 1 | 2/0 | 6 | − |

| MMAS | 75/80 | 0.02 | 0.00 | 1 | 2/0 | − | − |

| ACS | 10/10 | 0.1 | 0.90 | 1 | 5/0 | − | − |

We solved two classical NP−hard combinatorial optimization problems.

The relevant parameters for every algorithm/problem are summarized in the table below.

| Common to all | RAS and EAS | TSP | ||||

|---|---|---|---|---|---|---|

| ants | alpha | rho | q0 | rasrank | elitistants | beta |

| [5, 100] | [0, 10] | [0.01, 1] | [0, 1] | [1, 100] | [1, 175] | [0, 10] |

We performed the tuning of the algorithms described for the tackled problems, we used irace as auotmatic configuration tool. Irace is available as an R package.

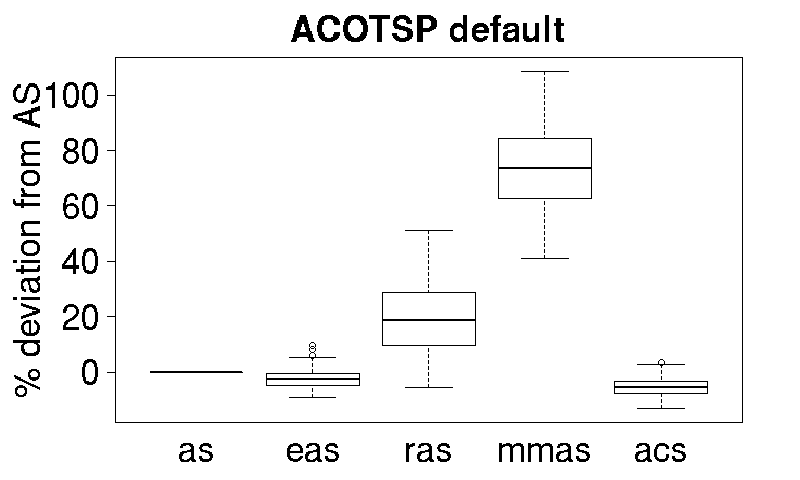

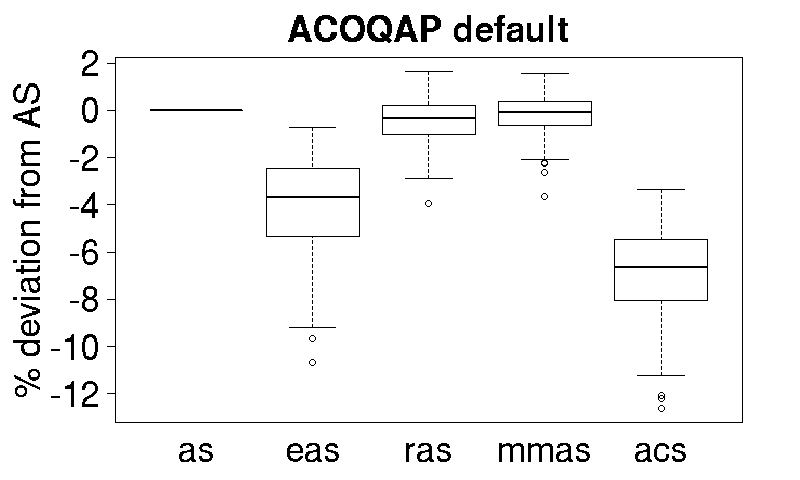

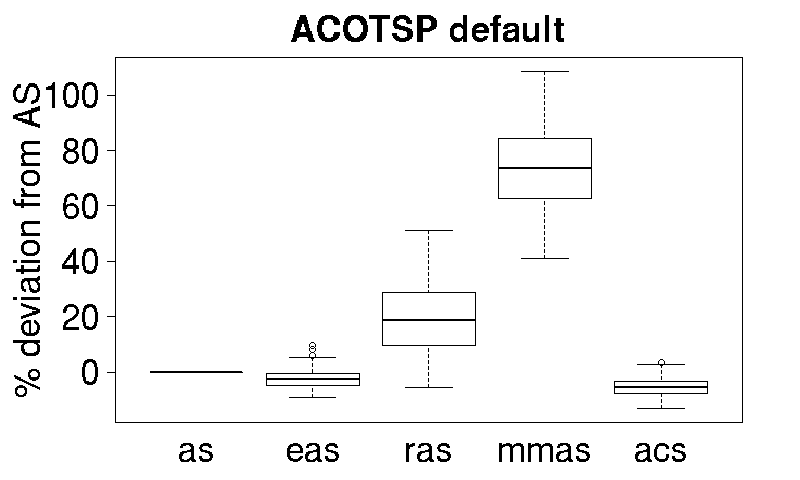

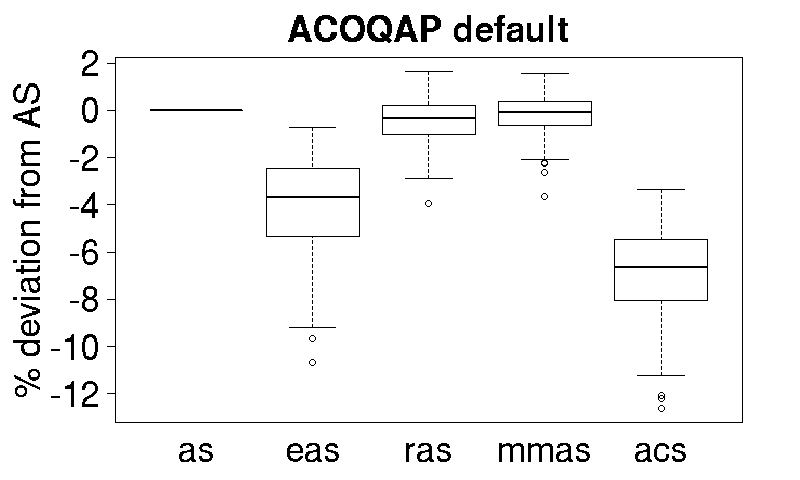

We compared the performance of the algorithms when using its default settings:

|

|

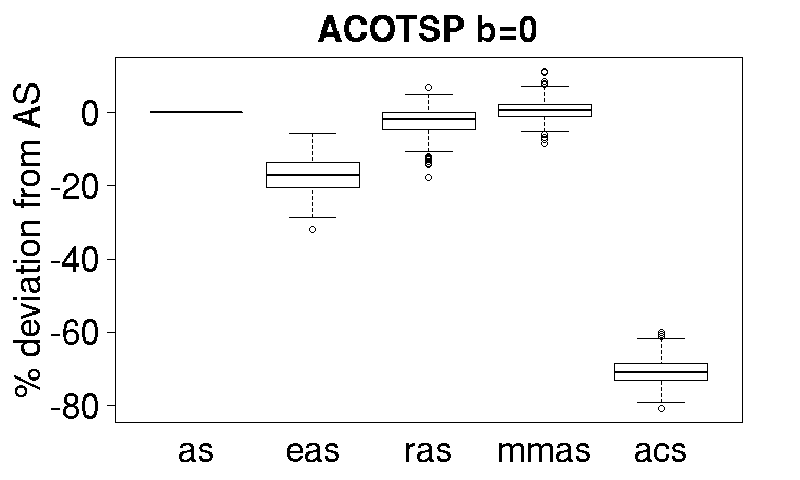

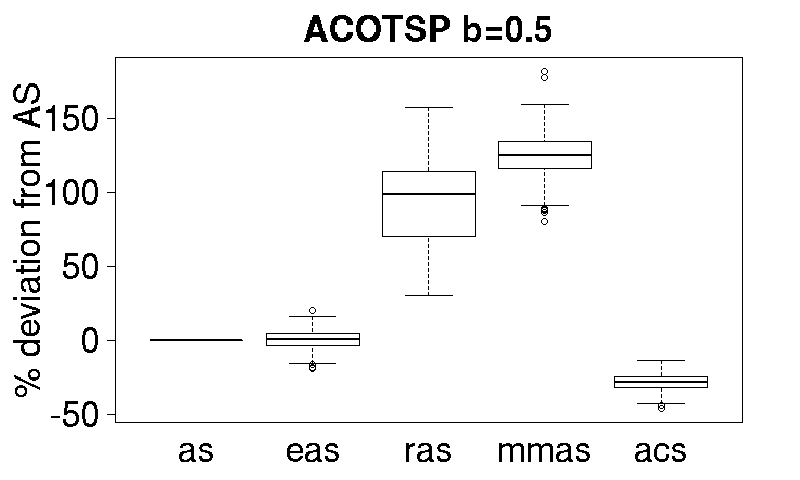

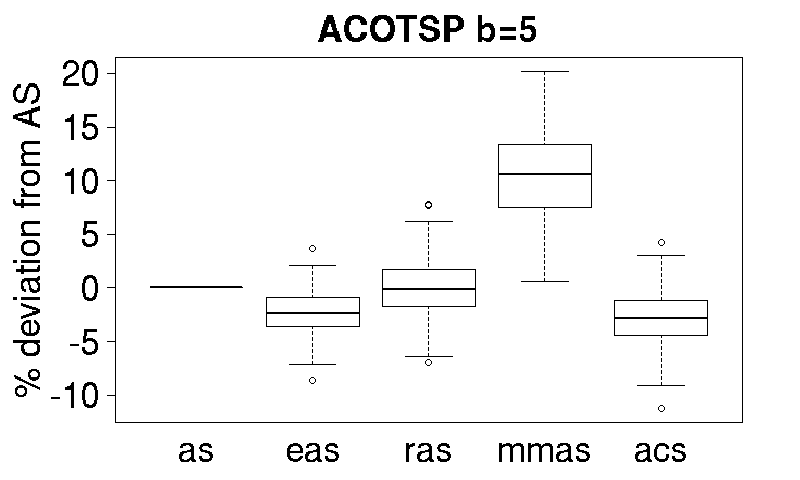

Both tests show a different behaivour of the algorithms, this might be influenced by the fact that ACOQAP does not use heuristic information, and therefore beta=0. For investigate this we perform test using beta={0, 0.5, 5}. Using beta=0 we can see the same behaviour observed in the ACOQAP tests.

|

|

|

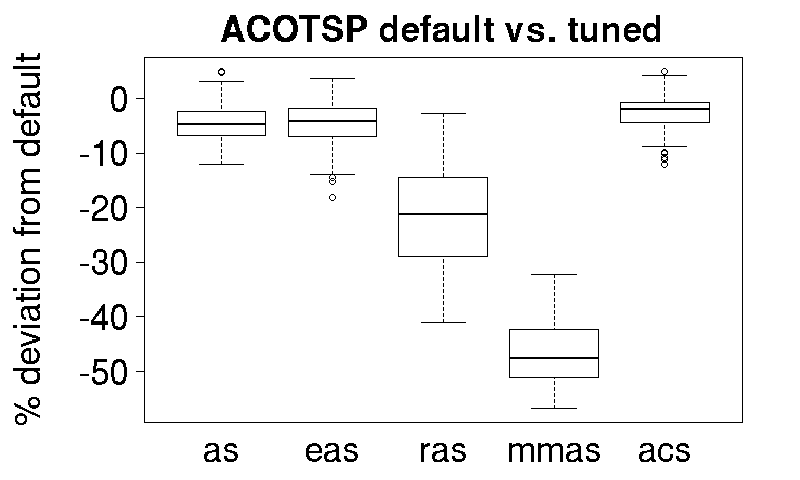

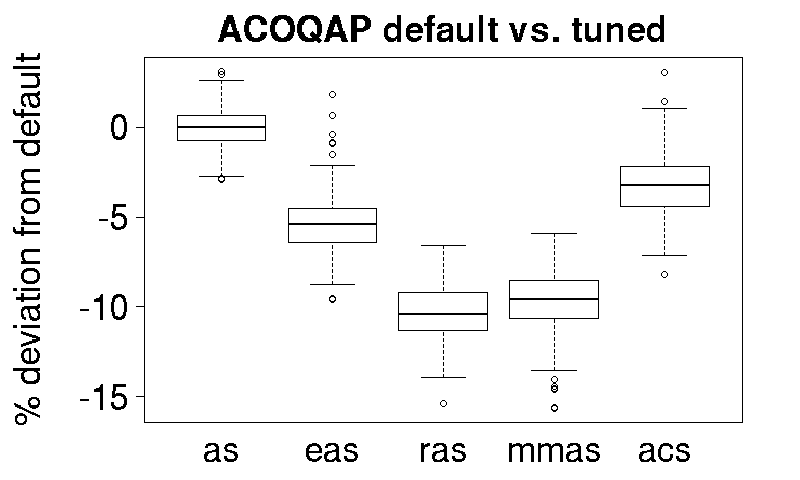

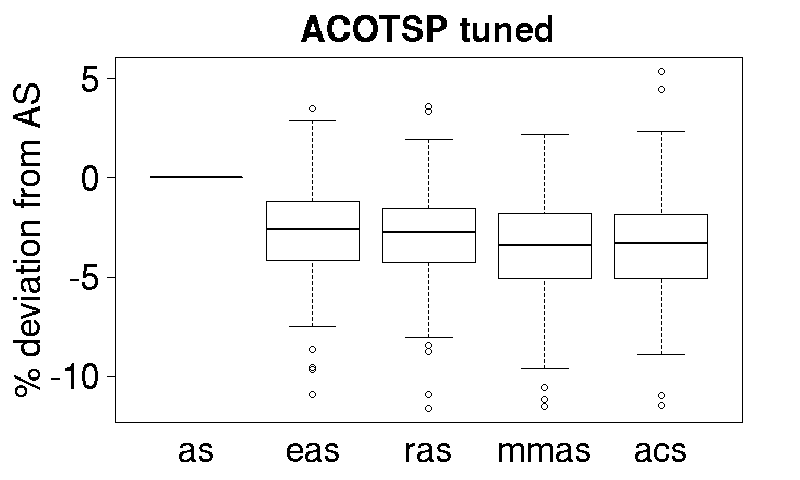

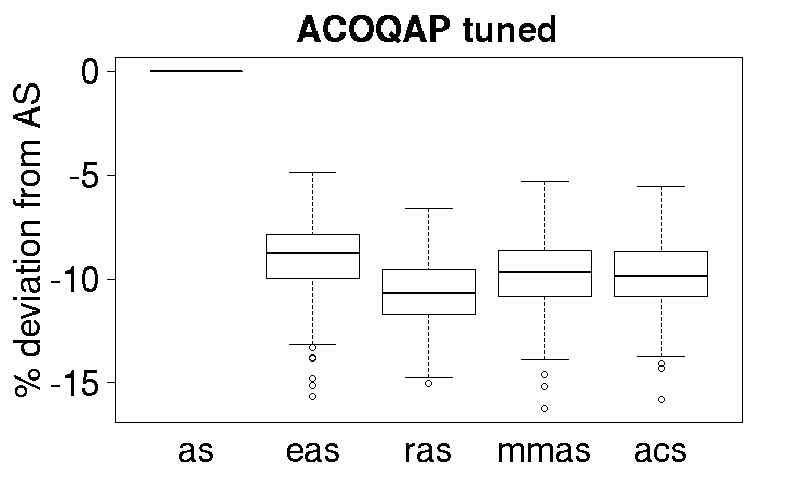

We perform the tuning over the basic ACOTSP and ACOQAP algorithms, first following graphs show the improvement of each algorithm (over 20 executions of irace) compared with the default version. The second set of graphs shows the distance from AS.

|

|

|

|

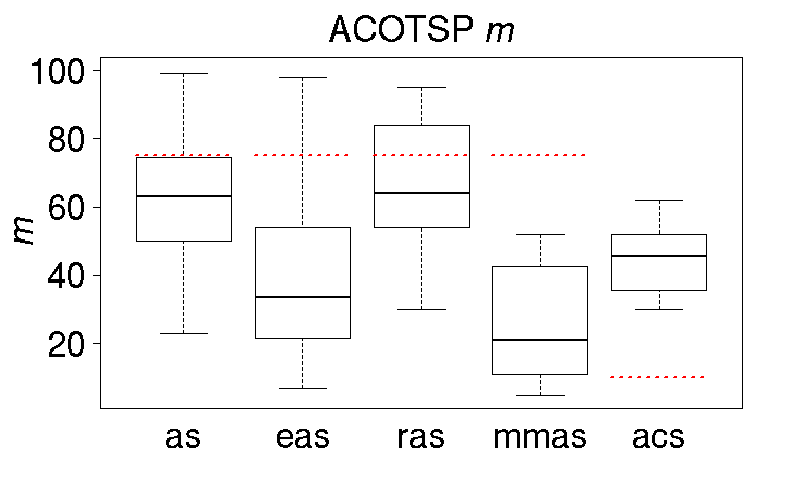

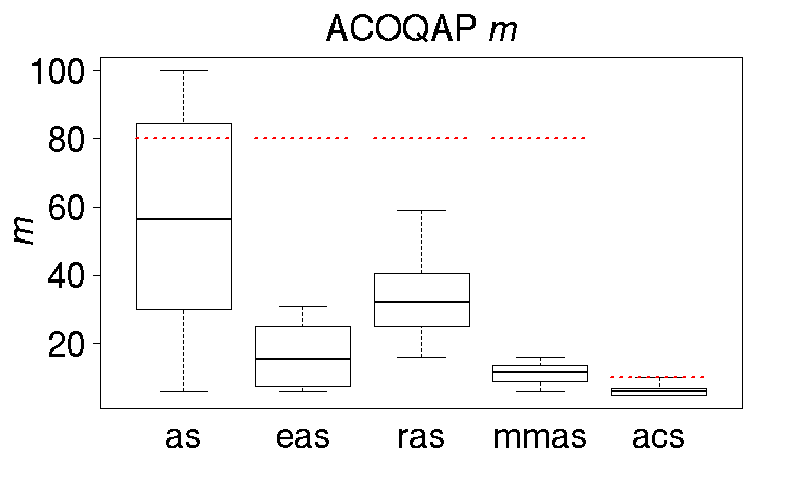

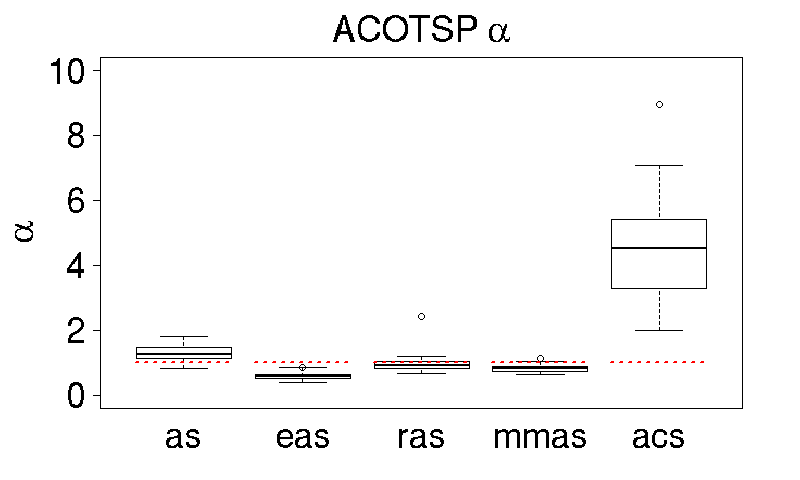

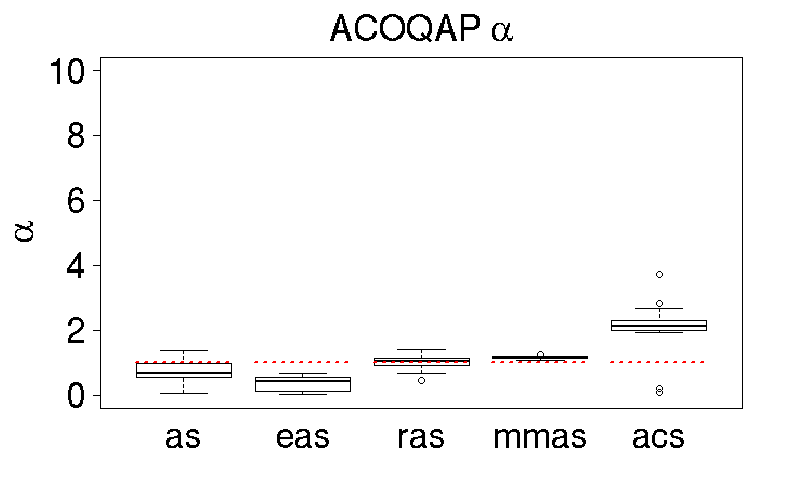

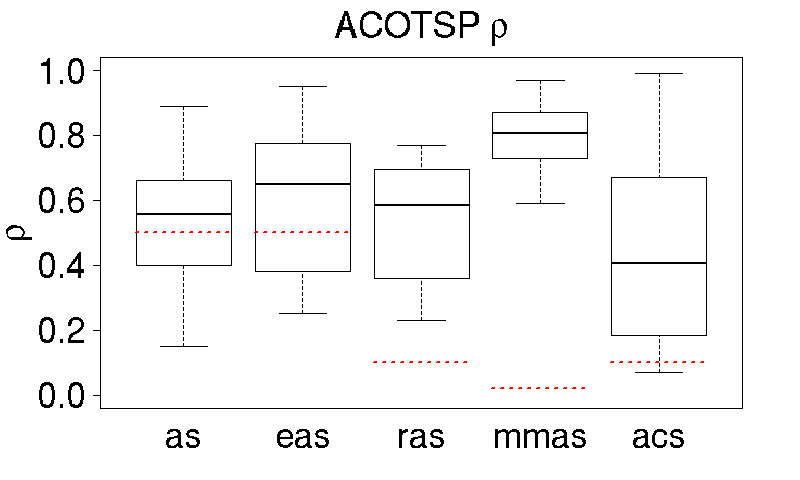

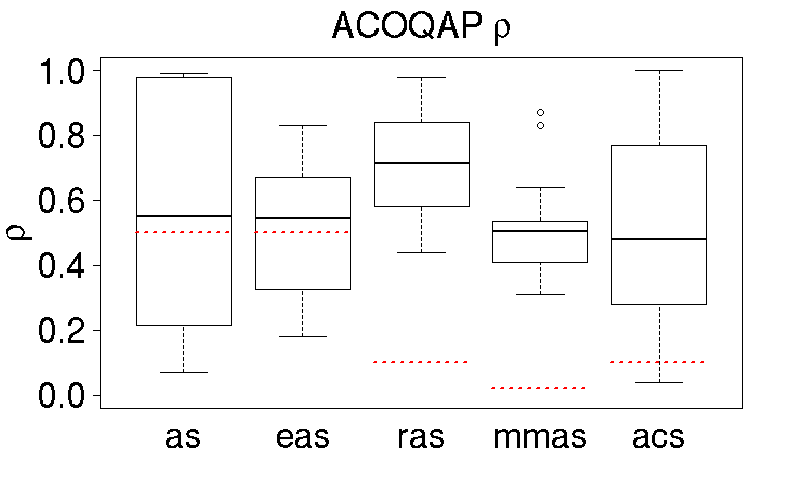

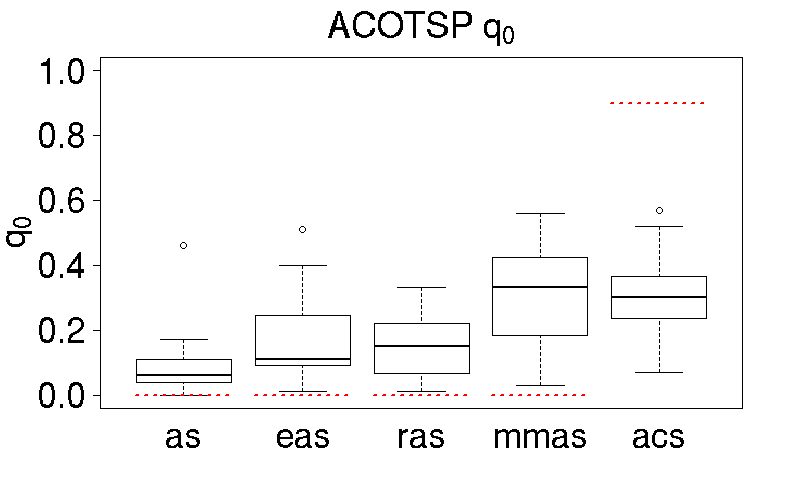

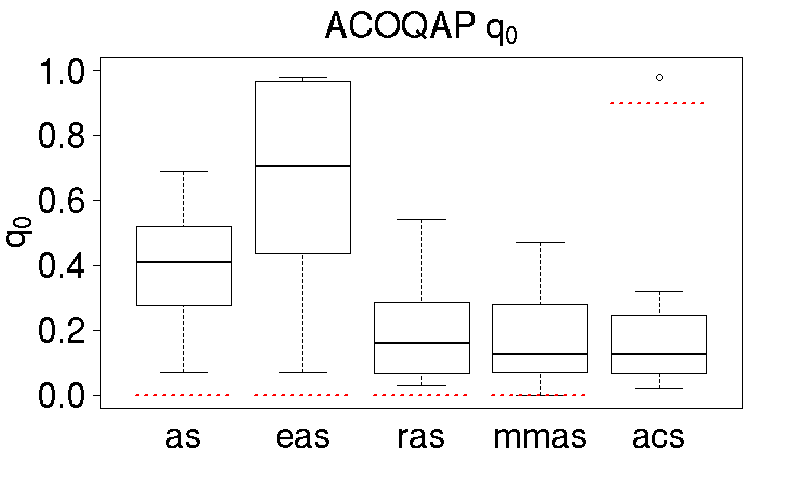

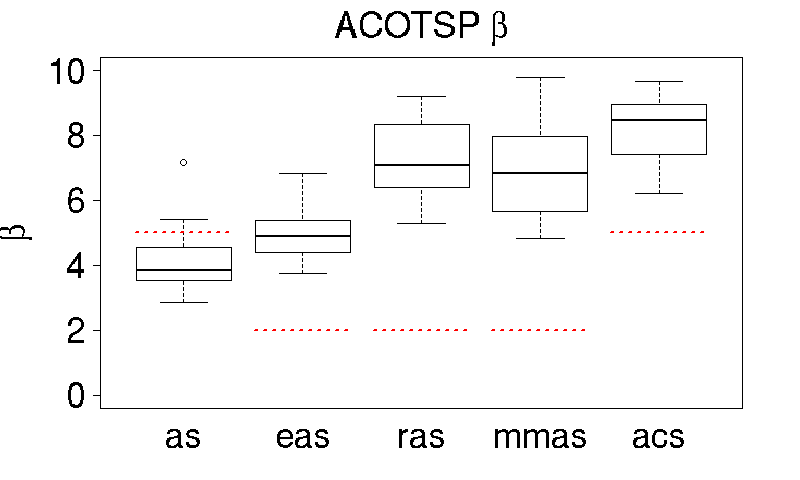

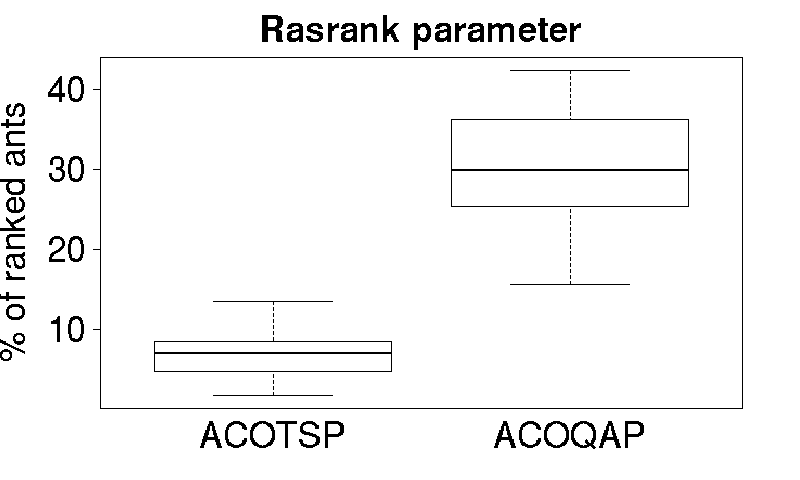

The parameter values obtained are depicted in the following graphs:

|

|

|

|

|

|

|

|

|

|

[1] Dorigo, M., Maniezzo, V., Colorni, A.: Ant System: Optimization by a colony of cooperating agents. IEEE Transactions on Systems, Man, and Cybernetics − Part B 26(1), 29−41 (1996)

[2] Bullnheimer, B., Hartl, R., Strauss, C.: A new rank-based version of the Ant System: A computational study. Central European Journal for Operations Research and Economics 7(1), 25−38 (1999)

[3] Stützle, T., Hoos, H.H.: MAX−MIN Ant System. Future Generation Compute Systems 16(8), 889−914 (2000)

[4] Dorigo, M., Gambardella, L.M.: Ant Colony System: A cooperative learning approach to the traveling salesman problem. IEEE Transactions on Evolutionary Computation 1(1), 53−66 (1997)

[5] López−Ibánez, M., Stützle, T.: Automatically improving the anytime behaviour of optimisation algorithms. European Journal of Operational Research 235(3), 569−582 (2014)

[6] Pellegrini, P., Mascia, F., Stützle, T., Birattari, M.: On the sensitivity of reactive tabu search to its meta-parameters. Soft Computing (In press)